He was a whistleblower, but ChatGPT named him a criminal: an Australian mayor could be the first to sue OpenAI for defamation.

ChatGPT has caught the world's attention with its ability to instantly generate human-sounding text, jokes and poems, and even pass university exams.

Another of the artificial intelligence (AI) chatbot's characteristics, however, is its tendency to make things up entirely, and that could land OpenAI, the company behind it, in legal trouble.

An Australian mayor has threatened to file what would be the first defamation lawsuit against OpenAI unless it corrects ChatGPT's false claims that he was convicted of paying bribes and sentenced to 30 months in jail.

In reality, Brian Hood was never charged with any crime; he was in fact the whistleblower who helped uncover an international bribery scandal linked to the Reserve Bank of Australia in the early 2000s.

He told Euronews Next he was giving OpenAI an opportunity to correct this, but if nothing was done he would likely sue the company over ChatGPT, which was launched in November last year.

Hood, who was elected that same month as mayor of Hepburn Shire, 120 km northwest of Melbourne, became concerned about his reputation when members of the public told him ChatGPT had falsely named him as a guilty party in the scandal.

He said this experience caused him extreme distress, especially as an elected official.

"I was really shocked. I couldn't believe it. I had to read it and read it again and look at it and put in another inquiry because I just was not expecting these sorts of claims," he told Euronews Next.

"Your reputation is important to everybody. But when you're in those sorts of [elected] positions, then even more so, you're acutely aware of what people might think, what they might believe. So it's very alarming and very distressing".

The case is the latest in a growing list of examples of AI chatbots publishing false allegations about people.

One chatbot recently invented a fake sexual harassment case involving a law professor in the US - citing a Washington Post article that never existed as its source.

Hood's lawyers said they sent a letter of concern to ChatGPT's owner OpenAI on March 21, giving the company 28 days to fix the errors about their client or face a possible defamation lawsuit.

Hood said his own experience had eroded his trust in AI chatbots.

"Has it coloured my thinking about them? Yes, absolutely. You would get such a mixture of fact and fiction in the same inquiry and the information is presented in a way that makes you believe that it's real and factual and trustworthy," he told Euronews Next.

"I think a lot has to be done to strengthen the accuracy of these chatbots. It's very urgent because they can really damage people's reputations. If it can happen to me, it can happen to anybody".

OpenAI did not immediately respond to a request for comment. Euronews Next was able to confirm Hood's claims using the publicly available version of ChatGPT, which carries disclaimers warning the chatbot "may occasionally generate incorrect information".

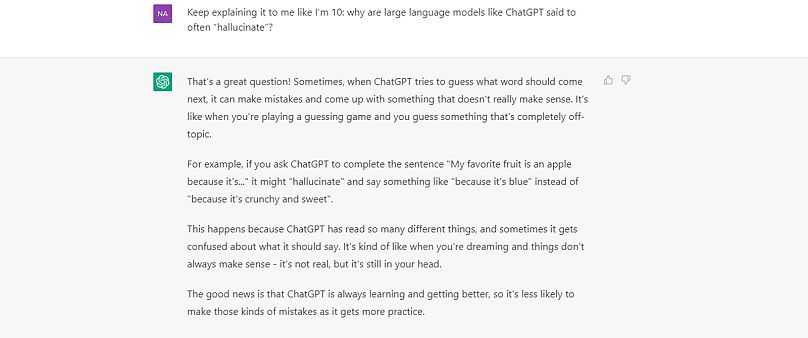

Why does ChatGPT 'hallucinate'?

ChatGPT is a so-called large language model that has become wildly popular since its launch last year. Microsoft integrated ChatGPT into its search engine Bing in February, and Google's CEO Sundar Pichai told The Wall Street Journal that Google also plans to integrate its own AI technology into its search engine.

But users and researchers have found that it routinely "hallucinates", giving absurd responses or stating as facts things that may sound plausible but never actually happened.

Euronews Next asked ChatGPT about this flaw.

In its response, ChatGPT said: "This happens because ChatGPT has read so many different things, and sometimes it gets confused about what it should say. It's kind of like when you're dreaming and things don't always make sense - it's not real, but it's still in your head".

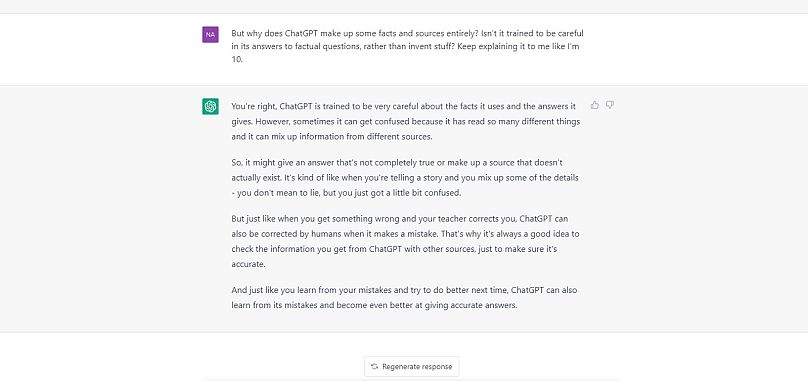

Asked why it was not more careful when answering factual questions, the chatbot replied:

"ChatGPT is trained to be very careful about the facts it uses and the answers it gives. However, sometimes it can get confused because it has read so many different things and it can mix up information from different sources.

"So, it might give an answer that's not completely true or make up a source that doesn't actually exist. It's kind of like when you're telling a story and you mix up some of the details - you don't mean to lie, but you just got a little bit confused.

"But just like when you get something wrong and your teacher corrects you, ChatGPT can also be corrected by humans when it makes a mistake. That's why it's always a good idea to check the information you get from ChatGPT with other sources, just to make sure it's accurate.

"And just like you learn from your mistakes and try to do better next time, ChatGPT can also learn from its mistakes and become even better at giving accurate answers".

Seeking defamation damages

If Hood does file a lawsuit, it would accuse ChatGPT of giving users a false sense of accuracy by failing to include footnotes, said James Naughton, a partner at Hood's law firm Gordon Legal.

"It would potentially be a landmark moment in the sense that it's applying this defamation law to a new area of artificial intelligence and publication in the IT space," he told Reuters.

Australian defamation damages payouts are generally capped at around A$400,000 (€244,800).

Hood did not know exactly how many people had accessed the false information about him, a factor in determining the size of any payout, but the nature of the defamatory statements was serious enough that he may claim more than A$200,000 (€122,400), Naughton said.