The German university’s study found that users’ negative reactions to unknowingly interacting with chatbots rose according to how critical they deemed the service query.

Designed to mimic human-like interactions by way of text messages or online chat windows, chatbots are rapidly becoming the first - and sometimes the only - point of engagement with web-based customer services across healthcare, retail, government, banking, and more.

Advances in artificial intelligence and natural language processing, plus a global pandemic that stripped human contact back to the bare minimum, have put the chatbot centre-stage in online interactions and marked it out as an essential part of the future of customer service.

But new research from the University of Göttingen suggests humans aren’t ready for the chatbot to take over just yet - especially not without prior knowledge of its presence behind interactions.

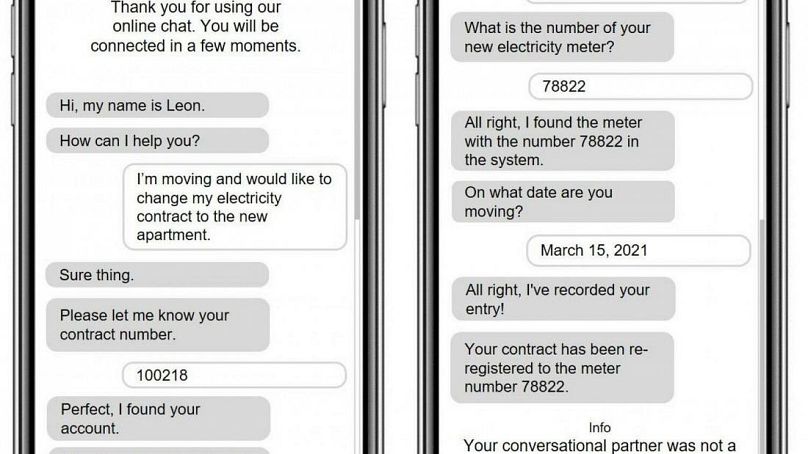

The two-part study published in the Journal of Service Management found that users reacted negatively once they learned that they were in communication with chatbots during an exchange online.

However, when a chatbot made a mistake or could not fulfil a customer’s request, but did disclose the fact that it was a bot, users tended to react more positively and were more accepting of the outcome.

The researchers also found that users’ negative reactions rose in line with how critical or important they deemed their service query to be.

More forgiving of chatbots

Each study had 200 participants in a scenario where they contacted their energy supplier via online chat to update addresses on their electricity contracts after moving house.

Half of the respondents were informed that they were interacting with a chatbot, while the other half were not.

"If their issue isn’t resolved, disclosing that they were talking with a chatbot makes it easier for the consumer to understand the root cause of the error," Nika Mozafari, lead author of the research, said.

"A chatbot is more likely to be forgiven for making a mistake than a human."

Researchers also suggested that customer loyalty could even improve after such encounters, provided users are told in good time that they are dealing with a chatbot.

As a measure of the growing sophistication of and investment in chatbots, the Göttingen study comes within days of Facebook announcing an update of its open-source Blender Bot, launched last April.

"Blender Bot 2.0 is the first chatbot that can simultaneously build long-term memory it can continually access, search the internet for timely information and have sophisticated conversations on nearly any topic," the social media giant said on its Facebook AI blog.

Facebook AI research scientist Jason Weston and research engineer Kurt Shuster said that current chatbots, including the original Blender Bot 1.0, "are capable of expressing themselves articulately in ongoing conversations and can generate realistic looking text, but have 'goldfish memories'".

Work is also continuing on eliminating repetition and contradiction from longer conversations, they said.