Are programmes like ChatGPT bringing useful change or unknown chaos?

Since ChatGPT exploded onto the scene in November 2022, many have contemplated how it might transform life, jobs and education as we know it, for better or worse.

Many, including us, are excited by the benefits that digital technology can bring to consumers.

However, our experience in testing digital products and services, shaping digital policy, and diving into consumers’ perspectives on IoT, AI, data and platforms means our eyes are also wide open to the challenges of disruptive digitalisation.

After all, consumers should be able to use the best technology in the way they want to and not have to compromise on safety and trust.

What’s in it for consumers?

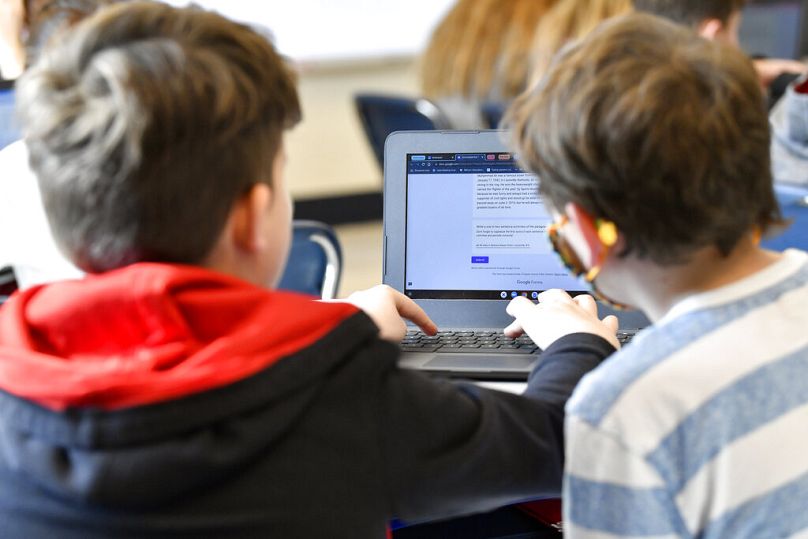

There’s plenty of positive potential in new, generative technologies like ChatGPT, including producing written content, creating training materials for medical students or writing and debugging code.

We’ve already seen people find inventive ways to use ChatGPT for everyday consumer tasks, for example, to write a successful parking fine appeal.

And when asked, ChatGPT had its own ideas of what it could do for consumers.

“I can help compare prices and specifications of different products, answer questions about product maintenance and warranties, and provide information on return and exchange policies…. I can also help consumers understand technical terms and product specifications, making it easier for them to make informed decisions,” it told us.

Reading this, you might wonder whether this level of service from a machine could make experts in all fields, including ours, obsolete.

However, the rollout of ChatGPT and similar technologies has shown that they still have a problem with accuracy, which is, in turn, a problem for their users.

The search for truth

Let’s start by looking at the challenge of accuracy and truth in a large language model like ChatGPT.

ChatGPT has started to disrupt internet search through a rollout of the technology in Microsoft’s Bing search engine.

With ChatGPT-enabled search, results appear not as a list of links but as a neat summary of the information within the links, presented in a conversational style.

The answers can be finessed through more questions, just as if you were chatting to a friend or advisor.

This could be really helpful for a request like "can you show me the most lightweight tent that would fit into a 20-litre bike pannier".

Results like these would be easy to verify, and perhaps more crucially, if they turn out to be wrong, they would not pose a major risk to a person.

However, it's a different story when the information that is "wrong" or "inaccurate" carries a material risk of harm — for example, health or financial advice or deliberate misinformation that could cause wider social problems.

It's convincing, but is it reliable?

The problem is that technologies like ChatGPT are very good at writing convincing answers.

But OpenAI have been clear that ChatGPT has not been designed to write text that is true.

It is trained to predict the next word and create answers that sound highly plausible — which means that a misleading or untrue answer could look just as convincing as a reliable, true one.

The speedy delivery of convincing, plausible untruths through tools like ChatGPT becomes a critical problem in the hands of users whose sole purpose is to mislead, deceive and defraud.

Large language models like ChatGPT can be trained to learn different tones and styles, which makes them ripe for exploitation.

Convincing phishing emails suddenly become much easier to compose, and persuasive but misleading visuals are quicker to create.

Scams and frauds could become ever more sophisticated, and disinformation ever harder to distinguish from reliable information. Both could become immune to the defences we have built up.

We need to learn how to get the best of ChatGPT

Even when focusing on just one aspect of ChatGPT, those of us involved in protecting consumers in Europe and worldwide have found multiple layers of consequences that this advanced technology could create once it reaches users' hands.

People in our field are already working with businesses, digital rights groups and research centres to start unpicking the complexities of such a disruptive technology.

OpenAI have put safeguards around the use of the technology, but other rollouts of similar products may not have them.

Strong, future-focused governance and rules are needed to make sure that consumers can make the most of the technology with confidence.

As the AI Act develops, Euroconsumers’ organisations are working closely with BEUC to secure consumer rights to privacy, safety and fairness in the legislative frameworks.

In the future, we will be ready to defend consumers in court against wrongdoing caused by AI systems.

True innovation still has human interests at its core

Still, there are plenty of reasons to look at the tools of the future, like ChatGPT, with optimism.

We believe that innovation can be a lever of social and economic development by shaping markets that work better for consumers.

However, true innovation needs everyone’s input and only happens when tangible benefits are felt in the lives of as many people as possible.

But we are only at the beginning of what is turning out to be an intriguing experience with these interactive, generative technologies.

It may be too early for a definitive last word, but one thing is absolutely sure: despite ChatGPT, and perhaps even because of it, there will still be plenty of need for consumer protection by actual humans.

Marco Pierani is the Director of Public Affairs and Media Relations, and Els Bruggeman serves as Head of Advocacy and Enforcement at Euroconsumers, a group of five consumer organisations in Belgium, Italy, Brazil, Spain and Portugal.

At Euronews, we believe all views matter. Contact us at view@euronews.com to send pitches or submissions and be part of the conversation.