Wikipedia use remains strong, but researchers warn that AI data scraping poses challenges for the online encyclopedia’s future.

Wikipedia is, at least for now, surviving the artificial intelligence (AI) era, according to a new study by King’s College London in the United Kingdom.


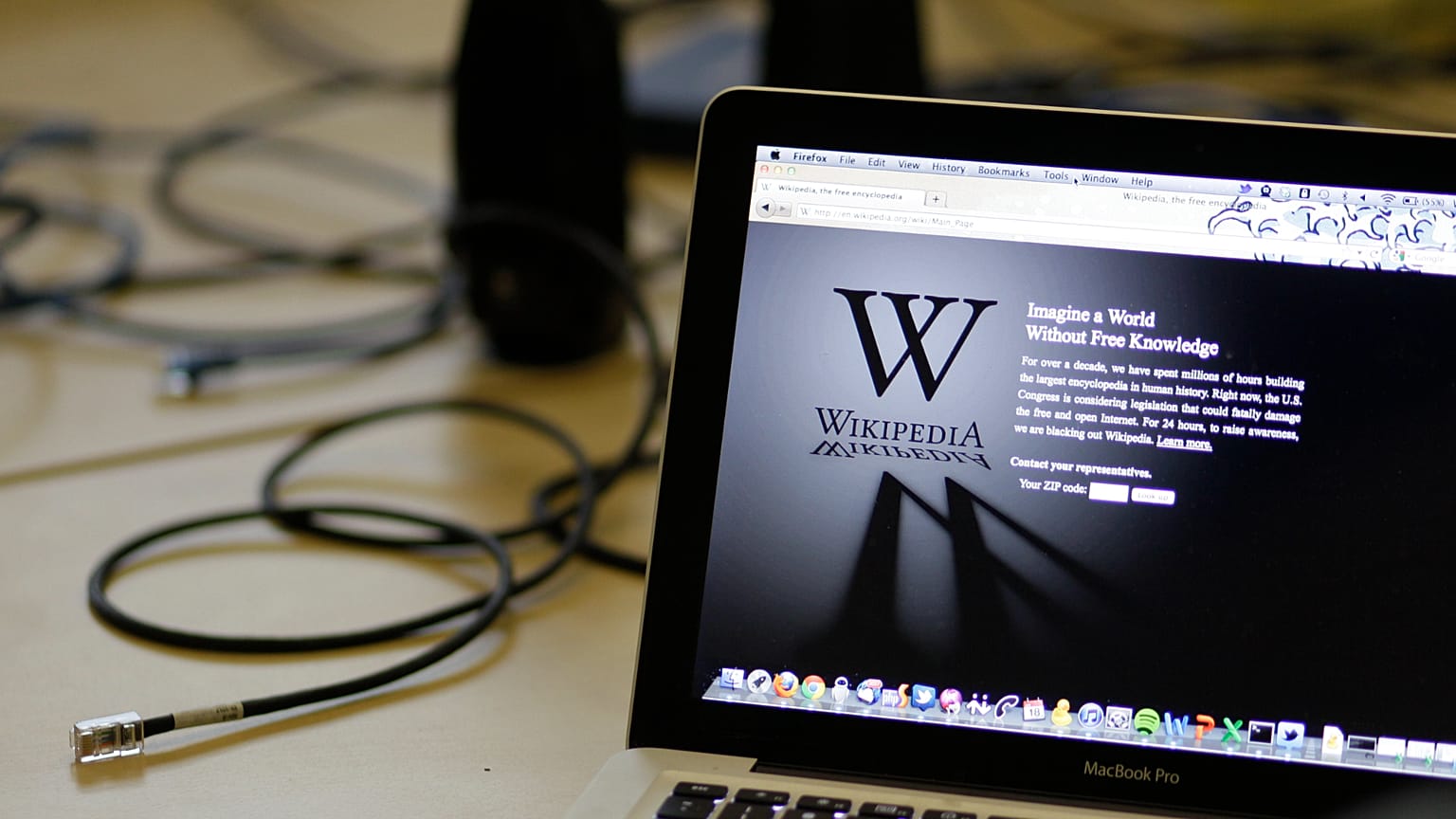

The free online encyclopedia, launched in 2001, has long been the subject of doom-laden predictions that the rise of AI tools such as ChatGPT would bring about its imminent death.

Pushing back against these negative forecasts, the study shows that people still use Wikipedia. It also notes that AI scraping (the large-scale collection of data from websites) and changes in how users access information pose challenges for the platform.

Wikipedia engagement continues

Published at the Association for Computing Machinery (ACM) Collective Intelligence conference, the study analysed 12 Wikipedia language editions, six with ChatGPT access and six without, from January 2021 to January 2024.

The researchers saw no drop in Wikipedia activity over the 36-month period. In fact, they found an increase in page views and visitor numbers across all language editions, although growth was smaller in languages where ChatGPT was available.

Despite this, the study found no evidence that ChatGPT reduced the number of edits or editors on Wikipedia.

The researchers also noted several limitations, such as the possibility that some users bypassed ChatGPT restrictions using virtual private networks (VPNs), and the fact that the study did not account for how popular ChatGPT was in different countries.

These findings align with previous research on AI and Wikipedia, which has shown steady traffic to the free encyclopedia while highlighting some significant challenges for the platform's future.

Long-term threat of AI to Wikipedia

While the data challenge predictions of Wikipedia's death, the researchers also stress that the encyclopedia is facing serious difficulties.

“AI developers are letting their scrapers loose on Wikipedia to train them on high-quality data, pushing up traffic to levels where Wikipedia’s servers are struggling to keep up,” Elena Simperl, professor of computer science at King’s and co-director of the King’s Institute for Artificial Intelligence, said in a statement.

Simperl also noted that AI-generated content often draws on Wikipedia without crediting it, diverting web traffic away from the online encyclopedia.

Neal Reeves, Simperl's colleague and the study's first author, called for a 'new social contract' between AI companies and Wikipedia, under which the platform could maintain control over its content while still allowing it to be used for AI training.

The study was published on the same day that Wikimedia Deutschland, the German branch of the foundation that runs Wikipedia, announced the Wikidata Embedding Project, a new database designed to make it easier for users, and especially AI models, to access Wikipedia's content.

With this system, AI developers will be able to use knowledge verified by Wikipedia editors rather than relying solely on scraped pages.