A new silent speech recognition system could help deaf people communicate, while also finding applications within the army and police.

Researchers in South Korea have designed a new silent speech recognition system that can accurately recognise words by tracking facial movements.

The technology aims to help deaf people, who cannot always communicate with others using sign language. But it could also be useful to the military or the police in situations where surrounding noise makes radio communication difficult.

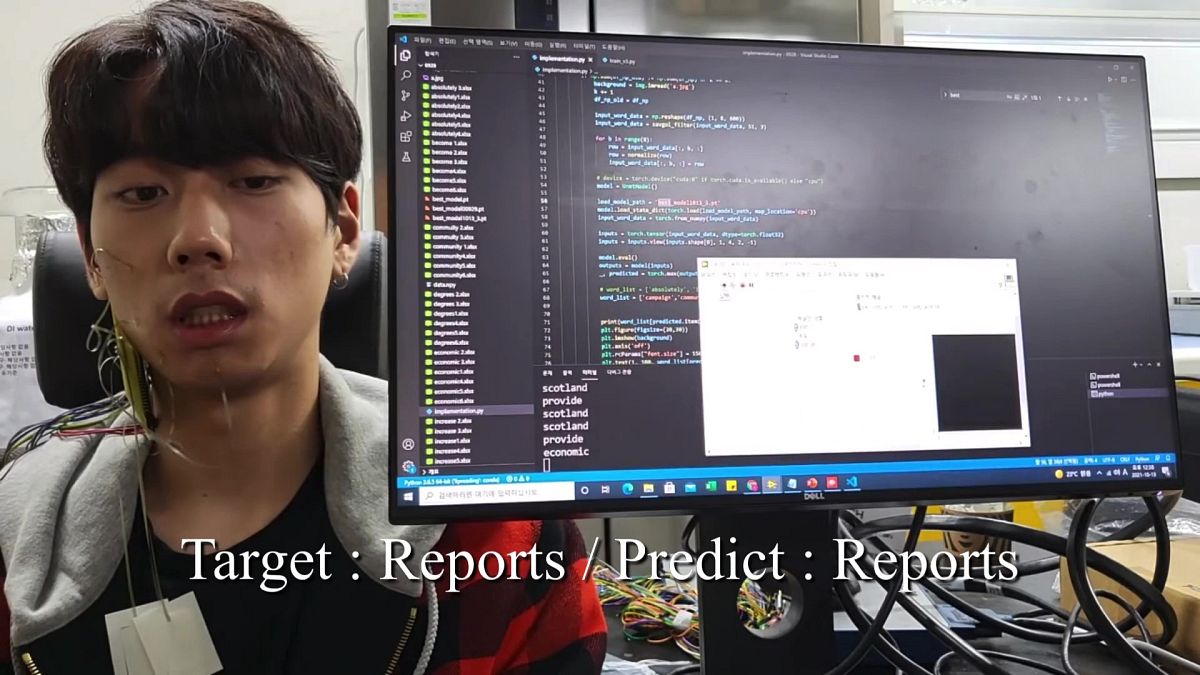

The silent speech interface relies on strain sensors to detect the skin’s expansion and contraction as a person mouths words. Using a deep learning algorithm, the new technology can track and convert these facial movements into words.

“The strain sensor attached to the face stretches and shrinks according to the skin’s stretchiness when a person speaks. And the electric properties of the strain sensors change accordingly,” Taemin Kim, of Yonsei University School of Electrical and Electronic Engineering, told Euronews Next.
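For readers who want a feel for the idea, here is a minimal sketch in Python of how a changing sensor signal could be matched to a word. It is not the team's method: the researchers use a deep learning algorithm, whereas this toy example swaps in simple nearest-template matching, and every name and number in it (`TEMPLATES`, `relative_change`, `classify`, the readings themselves) is a made-up assumption for illustration.

```python
import math

# Hypothetical templates: each word is a short sequence of relative signal
# changes from a single strain sensor. The values are invented for illustration.
TEMPLATES = {
    "hello": [0.00, 0.02, 0.05, 0.03, 0.00],
    "yes": [0.00, 0.04, 0.01, 0.00, 0.00],
}


def relative_change(baseline, reading):
    """Return the fractional change of a sensor reading against its resting value."""
    return (reading - baseline) / baseline


def distance(a, b):
    """Euclidean distance between two equal-length signal sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(signal, templates=TEMPLATES):
    """Pick the word whose template lies closest to the observed signal."""
    return min(templates, key=lambda word: distance(signal, templates[word]))


# Usage: raw readings (arbitrary units) measured against an assumed resting value of 100.
raw = [100.0, 102.1, 104.8, 103.1, 100.0]
signal = [relative_change(100.0, r) for r in raw]
word = classify(signal)
```

A real system would draw on many sensors at once and a trained neural network rather than two hand-written templates, but the flow is the same: skin movement changes the signal, and the signal's pattern is mapped to a word.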

The ultra-thin sensors are resistant to sweat and sebum, and the system recognised a set of 100 words with nearly 88 per cent accuracy, an unprecedentedly high performance, according to the team’s findings, published in Nature Communications.

Like pixels in an image

Silent speech recognition sensors are not new, but the team says the ones they’ve designed are hundreds of times smaller than existing ones – under 8 µm thick – giving their system high scalability.

In other words, they would simply need to add more of them, like pixels in an image, to better track the face’s movements and recognise more words.

"To classify and recognise more words, a higher resolution of information is needed. And that is why researchers today are trying to develop a high-resolution silent speech system that combines our wearable strain sensor with a highly integrated circuit that’s normally used in display or semiconductor production," said Kim.

"If we manage to increase the amount of information, so that the system can recognise more words and sentences, we expect that one day people with language disorders could have conversations in their everyday life."

Helping deaf people communicate

The World Health Organisation estimates that over 5 per cent of the world’s population – some 430 million people – have “disabling” hearing loss.

This typically leaves them relying on hearing aids, sign language or other rehabilitative therapies to communicate.

Scientists have been working on silent speech recognition technologies to enable non-acoustic communication.

However, it’s been challenging to develop a tool that can be used on the limited area of a human face in a dynamic environment.

Another method is vision recognition technology using a camera to record facial movements in high resolution, but it’s not a practical option in everyday settings.
