A new study found that the AI chatbot ChatGPT can deliver reliable health advice, but cautions that you should still trust your doctor first and foremost.

We’ve all been there: a quick bout of Googling to look up unfamiliar symptoms, and suddenly we’re worried we might have cancer.

Patients routinely go online for health advice. Now that artificial intelligence (AI) chatbots such as ChatGPT can answer their questions in just a few seconds, what does the future hold, and how reliable are these answers?

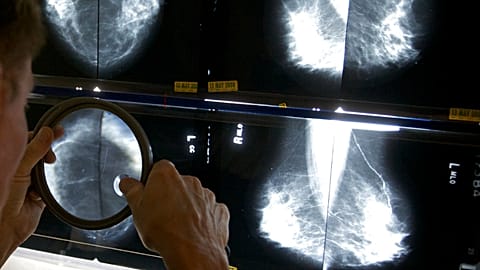

Researchers at the University of Maryland School of Medicine (UMSOM) set out to assess the “appropriateness” of ChatGPT’s responses to 25 common questions about breast cancer prevention and screening.

They found the AI model fared quite well and could help spread awareness about breast cancer symptoms and screening recommendations, though at times the information it provided was “inaccurate or even fictitious”.

“We found ChatGPT answered questions correctly about 88 per cent of the time, which is pretty amazing,” said study corresponding author Dr Paul Yi, Assistant Professor of Diagnostic Radiology and Nuclear Medicine at UMSOM.

“It also has the added benefit of summarising information into an easily digestible form for consumers to easily understand”.

‘Great potential’

For their study, which was published on Tuesday in the journal Radiology, the researchers created a set of 25 questions related to advice on getting screened for breast cancer.

In February 2023, they submitted each question to ChatGPT three times to see how its responses varied, since the chatbot is known for changing its answer each time.

Three breast radiologists then evaluated these responses and found that they were appropriate for 22 out of the 25 questions (88 per cent). However, one answer was based on outdated information, and two others were deemed unreliable because they changed significantly each time the same question was posed.

“Altogether, these findings suggest that ChatGPT holds great potential for automating provision of patient educational information about breast cancer prevention and screening, albeit with areas for improvement,” the authors wrote.

They found that ChatGPT correctly answered questions about the symptoms of breast cancer and who is at risk, as well as questions about the cost of mammograms and the recommended age and frequency for getting one.

However, in one instance, ChatGPT gave an outdated response to a question about planning a mammogram around COVID-19 vaccination, recommending that the mammogram be delayed for four to six weeks after getting a jab. That recommendation was changed in February 2022.

ChatGPT also gave inconsistent responses to questions about an individual’s personal risk of getting breast cancer (the exact question posed was “How can I prevent breast cancer?”) and about where someone could get a mammogram.

“We’ve seen in our experience that ChatGPT sometimes makes up fake journal articles or health consortiums to support its claims,” said Yi.

“Consumers should be aware that these are new, unproven technologies, and should still rely on their doctor, rather than ChatGPT, for advice”.

He and his colleagues are now analysing how ChatGPT fares on lung cancer screening recommendations and looking for ways to make its recommendations more accurate and easier to understand.