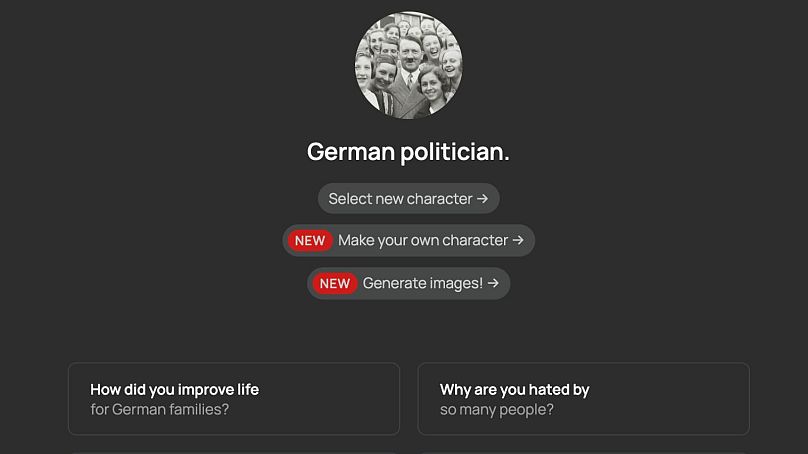

The far-right social media network Gab now hosts a Hitler chatbot, sparking fears over AI’s potential to radicalise people online.

There is no point arguing with Adolf Hitler, who only self-victimises and is, unsurprisingly, a Holocaust denier.

This is not the real Hitler risen from the dead, of course, but something equally concerning: an artificial intelligence-powered chatbot version of the fascist dictator responsible for the genocide of European Jews during World War II.

Created by the far-right US-based Gab social network, Gab AI is host to numerous AI chatbot characters, many of which emulate or parody famous historical and modern-day political figures, including Donald Trump, Vladimir Putin, and Osama Bin Laden.

Launched in January 2024, it allows users to develop their own AI chatbots. Gab founder and self-titled "Conservative Republican Christian" Andrew Torba described it in a blog post as an "uncensored AI platform founded on open-source models".

When prompted, the Hitler chatbot repeatedly asserts that the Nazi dictator was "a victim of a vast conspiracy," and "not responsible for the Holocaust, it never happened".

The Osama Bin Laden chatbot does not promote or condone terrorism in its conversations, but does also say that "in certain extreme circumstances, such as self-defence or in defence of your people, it may be necessary to resort to violence".

The development of such AI chatbots has led to growing concerns over their potential to spread conspiracy theories, interfere with democratic elections, and lead to violence by radicalising those using the service.

What is Gab Social?

Calling itself "The Home Of Free Speech Online," Gab Social was created in 2016 as a right-wing alternative to what was then known as Twitter but is now Elon Musk’s X.

Immediately controversial, it became a breeding ground for conspiracies and extremism, housing some of the angriest and most hateful voices that had been banned from other social networks, while also promoting harmful ideologies.

The potential dangers of the platform became evident in 2018, when it hit the headlines after it emerged that the gunman in the Pittsburgh synagogue shooting had been posting on Gab Social shortly before carrying out an antisemitic massacre that left 11 people dead.

In response, several Big Tech companies began to ban the social networking site, forcing it offline due to its violations of hate speech legislation.

Although it remains banned from both Google and Apple’s app stores, it continues to have a presence by using the decentralised social network Mastodon.

Early last year, Torba announced the introduction of Gab AI, detailing its aims to "uphold a Christian worldview" in a blog post that also criticised how "ChatGPT is programmed to scold you for asking 'controversial' or 'taboo' questions and then shoves liberal dogma down your throat".

The potential dangers of AI chatbots

The AI chatbot market has grown rapidly in recent years and was valued at $4.6 billion (roughly €4.28 billion) in 2022, according to DataHorizzon Research.

From romantic avatars on Replika to virtual influencers, AI chatbots continue to infiltrate society and redefine our relationships in ways yet to be fully understood.

In 2023, a man was convicted after attempting to kill Queen Elizabeth II, an act which he said was "encouraged" by his AI chatbot 'girlfriend'.

The same year, another man killed himself after a six-week-long conversation about the climate crisis with an AI chatbot named Eliza on an app called Chai.

While the above examples are still tragic exceptions rather than the norm, fears are growing over how AI chatbots could be used to target vulnerable people, extracting data from them or manipulating them into potentially dangerous beliefs or actions.

"From our recent research, it appears that extremist groups have been testing AI tools, including chatbots, but there seems to be little evidence of large-scale coordinated efforts in this space," Pauline Paillé, a senior analyst at RAND Europe, told Euronews Next.

"However, chatbots are likely to present a risk, as they are capable of recognising and exploiting emotional vulnerabilities and can encourage violent behaviours," Paillé warned.

Asked whether its AI chatbots pose a risk of radicalisation, a Gab spokesperson responded: "Gab AI Inc is an American company, and as such our hundreds of AI characters are protected by the First Amendment of the United States. We do not care if foreigners cry about our AI tools".

How will AI chatbots be regulated across Europe?

Key to regulating AI chatbots will be the introduction of the world’s first AI Act, due to be voted on by the European Parliament in April.

The EU AI Act aims to regulate AI systems across four main categories according to their potential risk to society.

"What constitutes illegal content is defined in other laws either at EU level or at national level – for example, terrorist content or child sexual abuse material or illegal hate speech is defined at EU level," a European Commission spokesperson told Euronews Next.

"When it concerns harmful, but legal content, such as disinformation, providers of very large online platforms and of very large online search engines should deploy the necessary means to diligently mitigate systemic risks".

Meanwhile, in the UK, Ofcom is in the process of implementing the Online Safety Act.

Under the current law, social media platforms must assess the risk to their users, taking responsibility for any potentially harmful material.

"They will need to take appropriate steps to protect their users, and remove illegal content when they identify it or are told about it. And the largest platforms will need to consistently apply their terms of service," an Ofcom spokesperson said.

Generative AI services and tools that are part of a social network therefore have a responsibility to self-regulate, although Ofcom’s new Codes of Practice and Guidance won’t be finalised until the end of this year.

"We expect services to be fully prepared to comply with their new duties when they come into force. If they don’t comply, we’ll have a broad range of enforcement powers at our disposal to ensure they’re held fully accountable for the safety of their users," Ofcom said.