The company took down a network of 150 groups and profiles linked to the Querdenken anti-lockdown movement, but analysis showed plenty of similar content stayed live.

Germany's federal elections took place on Sunday, drawing to a close months of debate and political campaigning.

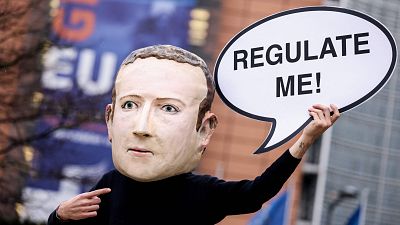

But the weeks before Sunday's vote were also marked by efforts to tackle disinformation spreading on Facebook.

A crackdown announced by the company on September 16 targeted a network of 150 groups and individuals associated with the so-called Querdenken movement in Germany, a loose confederation that includes anti-lockdown protesters, vaccine conspiracists and far-right extremists.

The move was the first deployment of Facebook's "coordinated social harm" policy, which aims to tackle organised attempts to spread disinformation and violate the company's content policies.

"The people behind this activity used authentic and duplicate accounts to post and amplify violating content, primarily focused on promoting the conspiracy that the German government’s COVID-19 restrictions are part of a larger plan to strip citizens of their freedoms and basic rights," Nathaniel Gleicher, Facebook's head of security policy, wrote in a blog post announcing the action.

Selective banning

However, a review of the content taken down from Facebook's platforms revealed that the action the social media giant took was more modest than the post announcing it suggested.

While the company did take down a large amount of Querdenken-related content, plenty remained in place across its platforms.

Four deleted Querdenken posts provided to the AP news agency by Facebook were found to be similar to content that remained live on Facebook and Instagram.

They included a post falsely stating that vaccines create new viral variants and another that wished death on police who broke up violent protests against COVID restrictions.

Analysis by Reset, a UK non-profit that aims to reform online media, found that many of the comments removed by Facebook were actually written by people attempting to rebut Querdenken arguments and did not include misinformation.

"This action appears rather to be motivated by Facebook’s desire to demonstrate action to policymakers in the days before an election, not a comprehensive effort to serve the public," Reset's researchers concluded.

Simon Hegelich, a political scientist at the Technical University of Munich, told The Associated Press that Facebook appeared to be using Germany as a "test case" for the new policy.

"Facebook is really intervening in German politics," Hegelich said.

"The COVID situation is one of the biggest issues in the election. They’re probably right that there’s a lot of misinformation on these sites, but nevertheless it’s a highly political issue, and Facebook is intervening in it".

Striking a balance

In his September 16 blog post, Gleicher acknowledged that the company was not banning Querdenken content outright, adding that his team was "continuing to monitor the situation and will take action if we find additional violations to prevent abuse on our platform and protect people using our services".

David Agranovich, Facebook's director of global threat disruption, told AP that the company planned to refine and expand the enforcement of its coordinated social harm policy in the future.

"This is a start. This is us extending our network disruptions model to address new and emerging threats," he said, adding that the company sought to strike a balance between permitting diverse views and preventing harmful content from spreading.

Members of the Querdenken movement reacted angrily to Facebook's decision, but many also expressed a lack of surprise.

"The big delete continues," one supporter posted in a still-active Querdenken Facebook group. "See you on the street."

Misinformation bias?

One issue Facebook's coordinated social harm policy faces is that misinformation appears to attract more engagement on its platforms than reliable information.

Earlier this month, researchers from New York University, Université Grenoble Alpes, and France's National Centre for Scientific Research published a preview of new findings which showed that posts from sources that regularly publish misinformation on Facebook received significantly more engagement from users than those from more trustworthy sources.

"On average, posts featuring misinformation received 4,653 interactions, compared to 773 for non-misinformation sources, whatever the political leaning of the news source," the researchers wrote.

They also noted that the data Facebook provides to researchers does not include impression data (the number of people who have seen a post), which limits their ability to make recommendations on solving the problem.

"Without knowing how many people have seen a post, it is impossible to say whether this is because Facebook’s algorithm promoted it more or if users simply engage more with content from misinformation publishers," they said.