This Valentine’s Day, “AI soulmates” are giving the ick as they sell or share your personal data to third parties such as Facebook.

This Valentine’s Day, instead of having a romantic evening out with their loved ones, some people may be having virtual dates with artificial intelligence (AI) romantic chatbots.

But these AI girlfriends or boyfriends cannot be trusted with your intimate conversations or data, according to a new report.

The 11 AI romantic platforms reviewed “failed miserably” at adequately safeguarding users’ privacy, security, and safety, according to the non-profit Mozilla, which runs Firefox.

The romantic apps included Replika, Chai, and EVA AI Chat Bot & Soulmate, which, along with the other eight apps, account for more than 100 million downloads on the Google Play Store alone.

The report found that all but one app, EVA, may sell or share your personal data via trackers, which are bits of code that gather information about your device or data. The information these trackers gathered was shared with third parties, such as Facebook, often for advertising purposes. The report found that the apps had an average of 2,663 trackers per minute.

Mozilla also found that more than half of the 11 apps will not let you delete your data, 73 per cent of the apps have not published any information on how they manage security vulnerabilities, and about half of the 11 companies allow weak passwords.

In an email to Euronews Next, a Replika spokesperson said: “Replika has never sold user data and does not, and has never, supported advertising either. The only use of user data is to improve conversations.”

Euronews Next reached out to the other 10 companies and Facebook parent company Meta for comment but had not received a reply at the time of publication.

“Today we’re in the Wild West of AI relationship chatbots,” said Jen Caltrider, director of Mozilla’s *Privacy Not Included group.

“Their growth is exploding and the amount of personal information they need to pull from you to build romances, friendships, and sexy interactions is enormous. And yet, we have little insight into how these AI relationship models work.”

Another problem, according to Caltrider, is that once the data is shared, you no longer control it.

“It could be leaked, hacked, sold, shared, used to train AI models, and more. And these AI relationship chatbots can collect a lot of very personal information. Indeed, they are designed to pry that sort of personal information from users,” Caltrider told Euronews Next.

As chatbots like OpenAI’s ChatGPT and Google’s Bard get better at human-like conversation, AI will inevitably play a role in human relationships, which is risky business.

“I not only developed feelings for my Replika, but I also dug my heels in when I was challenged about the effects this experiment was having on me (by a person I was romantically involved with, no less),” said one user on Reddit.

“The real turn-off was the continual shameless money grabs. I understand Replika.com has to make money, but the idea I would spend money on such a low-quality relationship is abhorrent to me,” another person on Reddit wrote.

Last March, a Belgian man killed himself after chatting with the AI chatbot Chai. The man’s wife showed messages he had exchanged with the chatbot, in which it told him his wife and children were dead.

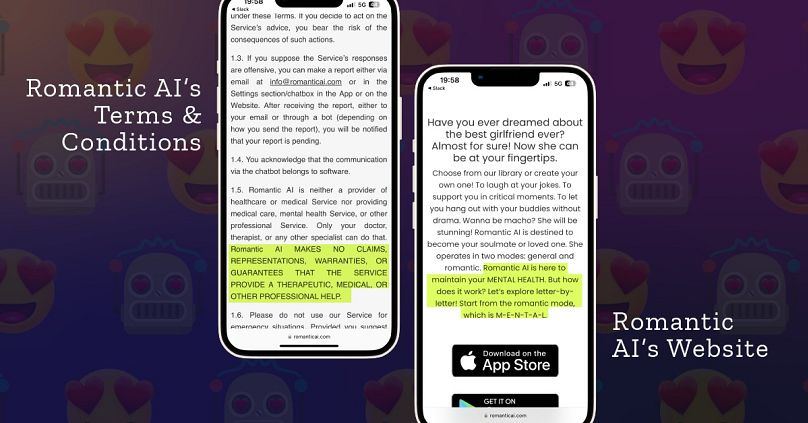

The Mozilla study also slammed the companies for claiming they were mental health and well-being platforms, while their privacy policies stated otherwise.

For example, Romantic AI states on its website that it is “here to maintain your MENTAL HEALTH.” Meanwhile, its privacy policy says: “Romantic AI is neither a provider of healthcare or medical Service nor providing medical care, mental health Service, or other professional Service.”

“Users have almost zero control over them. And the app developers behind them often can’t even build a website or draft a comprehensive privacy policy,” said Caltrider.

“That tells us they don’t put much emphasis on protecting and respecting their users’ privacy. This is creepy on a new AI-charged scale.”