Twitter could face huge fines unless it complies with new EU rules requiring tech companies to better tackle disinformation.

The European Commission has warned Elon Musk that Twitter must do much more to protect users from hate speech, misinformation and other harmful content, or risk a fine and even a ban under strict new EU content moderation rules.

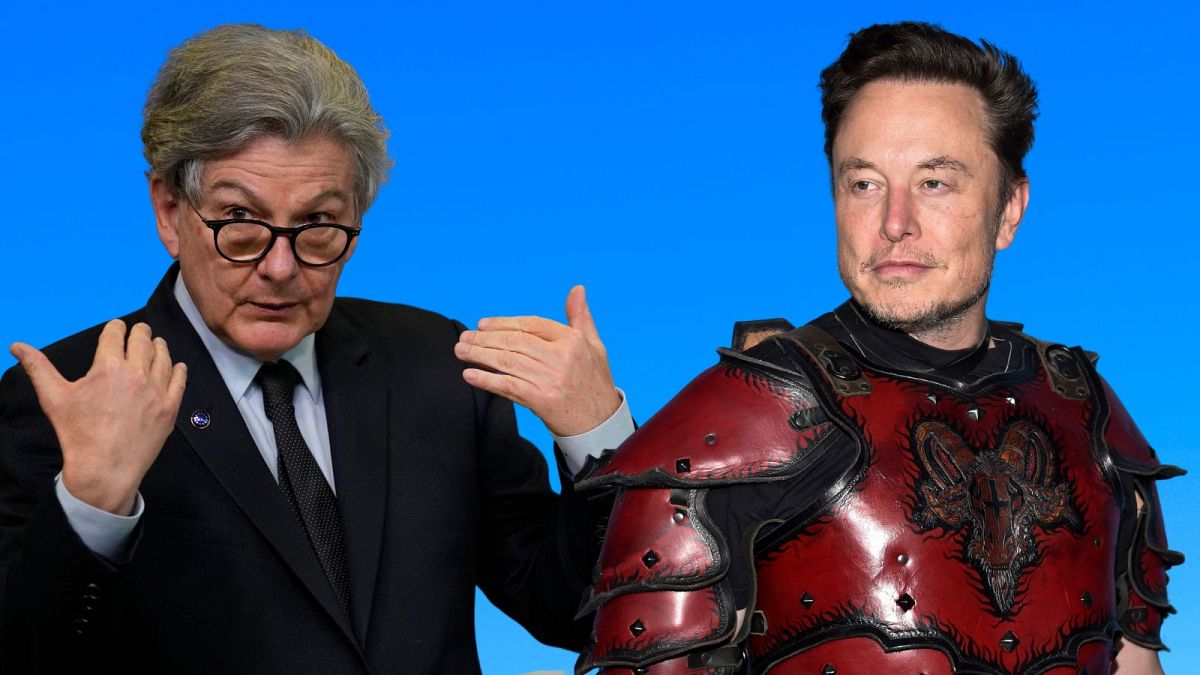

Thierry Breton, the EU's commissioner for digital policy, told the billionaire Tesla CEO that the social media platform will have to significantly increase efforts to comply with the new rules, known as the Digital Services Act, set to take effect next year.

The two held a video call on Wednesday to discuss Twitter's preparedness for the law, which will require tech companies to better police their platforms for material that, for instance, promotes terrorism, child sexual abuse, hate speech and commercial scams.

It’s part of a new digital rulebook that has made Europe the global leader in the push to rein in the power of social media companies, potentially setting up a clash with Musk’s vision for a more unfettered Twitter.

US Treasury Secretary Janet Yellen also said on Wednesday that an investigation into Musk's $44 billion (€42 billion) purchase was not off the table.

Breton said he was pleased to hear that Musk considers the EU rules "a sensible approach to implement on a worldwide basis".

"But let’s also be clear that there is still huge work ahead," Breton said, according to a readout of the call released by his office.

"Twitter will have to implement transparent user policies, significantly reinforce content moderation and protect freedom of speech, tackle disinformation with resolve, and limit targeted advertising."

Since Musk, a self-described "free speech absolutist," bought Twitter a month ago, groups that monitor the platform for racist, antisemitic and other toxic speech, such as the Cyber Civil Rights Initiative, say such content has been on the rise across the platform.

Musk has signalled an interest in rolling back many of Twitter’s previous rules meant to combat misinformation, most recently by abandoning enforcement of its COVID-19 misinformation policy.

He has already reinstated some high-profile accounts that had violated Twitter’s content rules and has promised a "general amnesty" restoring most suspended accounts starting this week.

In a blog post on Wednesday, Twitter said "human safety" is its top priority and that its trust and safety team "continues its diligent work to keep the platform safe from hateful conduct, abusive behaviour, and any violation of Twitter’s rules".

Musk, however, has laid off half the company’s 7,500-person workforce, along with an untold number of contractors responsible for content moderation. Many others have resigned, including the company’s head of trust and safety.

Twitter 'stress test'

In the call with Breton, Musk agreed to let the European Commission carry out a "stress test" at Twitter’s headquarters early next year to help the platform comply with the new rules ahead of schedule, the readout said.

That will also help the company prepare for an "extensive independent audit" as required by the new law, which is aimed at protecting internet users from illegal content and reducing the spread of harmful but legal material.

Violations could result in huge fines of up to 6 per cent of a company’s annual global revenue or even a ban on operating in the European Union's single market.

Along with European regulators, Musk risks running afoul of Apple and Google, which power most of the world’s smartphones.

Both have stringent policies against misinformation, hate speech and other misconduct, which they have previously enforced to remove apps such as the social media platform Parler from their app stores. Apps must also meet certain data security, privacy and performance standards.