Systems considered high risk, such as facial recognition, will be subject to extra scrutiny, says the European Commission.

Brussels is proposing new rules to mitigate the risks of artificial intelligence (AI).

The draft regulation is designed to guarantee that AI-powered technology is safe and respects fundamental rights.

"Our proposed legal framework doesn’t look at AI as a technology in itself, instead it looks at what AI is used for and how it is used," said Margrethe Vestager, executive vice-president of the European Commission. "It takes a proportional and risk-based approach grounded in one simple logic: the higher the risk that a specific use of AI may cause to our lives, the stricter the rule."

Brussels wants safety standards to go hand in hand with investment and innovation in order to make the EU the "global hub for trustworthy AI".

Alongside the new rules, the EU plans to invest €1 billion per year in AI. Brussels hopes that, with help from the private sector and the €750 billion coronavirus recovery fund (which is not yet operational), total AI investment in the bloc will exceed €20 billion annually over this decade.

"AI is a means, not an end. It has been around for decades but has reached new capacities fuelled by computing power," said Thierry Breton, European commissioner for the internal market.

"This offers immense potential in areas as diverse as health, transport, energy, agriculture, tourism or cybersecurity."

Brussels is eager to replicate the success of the General Data Protection Regulation (GDPR), a landmark piece of legislation on data protection and privacy that has inspired similar laws across the world.

The strategy has obvious geopolitical ambitions: the European Commission wants to chart an alternative path to the "laissez-faire" approach of the United States and the aggressive state-sponsored tactics of China. The EU executive believes that, by fostering reliable and predictable AI, Europe can gain a competitive edge over Washington and Beijing, although this thinking has yet to bear fruit.

The new framework consolidates three years of work that began in 2018 with a high-level expert group and a coordinated plan with EU member states, followed by numerous consultations, parliamentary debates and lobbying campaigns by the tech sector.

From permitted to unacceptable AI

The rulebook aims to promote "sustainable, secure, inclusive and human-centric artificial intelligence" through "proportionate and flexible rules".

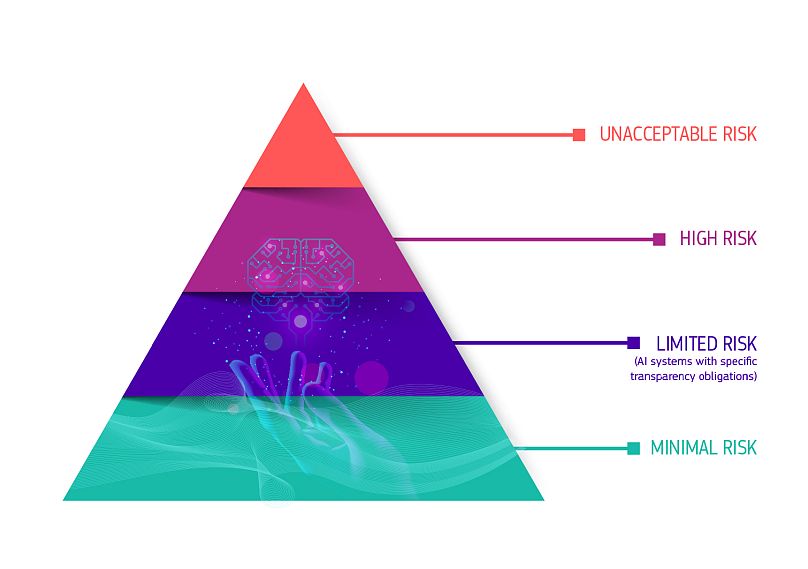

To this end, the European Commission has designed a pyramid-shaped framework that splits AI systems into four categories according to their potential risk:

- Minimal risk: the new rules won't apply to these AI systems because they pose only minimal or no risk to citizens' rights or safety. Companies and users will be free to use them. Examples include spam filters and video games. The European Commission believes most AI applications will fall into this category.

- Limited risk: these AI systems will be subject to specific transparency obligations so that users are aware they are interacting with a machine, can make informed decisions and can easily switch the system off. Examples include chatbots (chat assistants).

- High risk: given their potentially harmful implications for people's interests, these AI systems will be "carefully assessed before being put on the market and throughout their lifecycle". The Commission expects high-risk systems to be found in a variety of fields, such as transport, education, employment, law enforcement, migration and healthcare. Examples include facial recognition, surgical robots and applications that sort job applicants' CVs.

- Unacceptable: the Commission will ban AI systems that represent "a clear threat to the safety, livelihoods and rights of people". Examples include social scoring by governments (like China's social credit system), the exploitation of children's vulnerabilities and the use of subliminal techniques.

High-risk systems will be the most closely monitored. Once developed, they will have to undergo a conformity assessment and be registered in an EU database, and their providers will have to sign a declaration of conformity. These AI products will also have to bear the CE marking, a widely used logo in the EU indicating compliance with health, safety and environmental protection standards. Only once all these steps are complete will the product be allowed onto the market to reach consumers.

National authorities will then be in charge of market surveillance. If a high-risk AI system undergoes substantial changes (for example, through an update), it will have to go through the whole procedure again from the beginning.

To facilitate governance, the European Commission also proposes the creation of a European Artificial Intelligence Board that will help national authorities supervise and implement the new rules.

Like the GDPR, the new AI rulebook will be a regulation, meaning that its provisions will apply directly and uniformly across the bloc. All public and private actors in the EU will have to comply with it, as will those outside the bloc that place AI products on the European market.

Companies that infringe the requirements for high-risk and unacceptable AI systems could face fines of up to €30 million or 6% of their total worldwide annual turnover, whichever is higher.

Debate around biometrics

Even before Vestager and Breton presented the final draft, the rulebook was already a target of criticism. The most controversial aspect is the question of biometric identification systems, which are based on human characteristics such as facial traits, voice or emotions. Citizens encounter these applications when they unlock their smartphones or cross borders.

According to the regulation, all remote biometric identification systems will be categorised as high risk and will be subject to the strict requirements described above. Moreover, the use of "real-time" remote biometric identification in publicly accessible spaces by law enforcement will in principle be banned, falling into the "unacceptable" category.

However, the European Commission envisages "narrow exceptions" that would allow the use of biometrics in "targeted" police actions, such as the search for a missing child or the response to a terrorist threat. These uses will have to be authorised by a judicial body and be limited in time and scope.

While calling the regulation a "step in the right direction", the NGO European Digital Rights (EDRi) said the exceptions for biometric practices are "highly worrying" and could allow law enforcement to exploit loopholes.

"This leaves a worrying gap for discriminatory and surveillance technologies used by governments and companies. The regulation allows too wide a scope for self-regulation by companies profiting from AI. People, not companies, need to be the centre of this regulation," Sarah Chander, an expert on AI at EDRi, wrote in a statement.

The Greens/EFA Group of the European Parliament voiced similar concerns regarding the exceptions.

"Biometric and mass surveillance, profiling and behavioural prediction technology in our public spaces undermines our freedoms and threatens our open societies. The European Commission's proposal would bring the high-risk use of automatic facial recognition in public spaces to the entire European Union, contrary to the will of the majority of our people," said MEP Patrick Breyer.

Meanwhile, the private sector welcomed the Commission's move, albeit with caveats.

While praising the regulation's risk-based approach, DIGITALEUROPE, the trade association representing digital companies in Europe, said that "the inclusion of AI software into the EU’s product compliance framework could lead to an excessive burden for many providers".

"After reading this Regulation, it is still an open question whether future start-up founders in ‘high risk’ areas will decide to launch their business in Europe," wrote director Cecilia Bonefeld-Dahl.