Two “groundbreaking” brain-computer interfaces are being tested on paralysed people who lost the ability to speak.

Artificial intelligence (AI) is helping speech-aid technology get closer to delivering speech at normal speeds with large vocabularies of more than 1,000 words.

Two recently developed brain-computer interfaces that have been described as “groundbreaking” are being tested on paralysed people who can no longer speak.

One patient, Pat Bennett, has amyotrophic lateral sclerosis (ALS), a rare condition which gets worse over time as the nerve cells that control movement degenerate.

She has been fitted with tiny electrode implants by a research team at Stanford University.

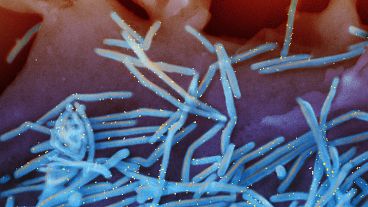

Mounted in her skull, the implants amplify the signals from individual brain cells, or neurons, and send them out through the scalp. The amplified signals are then run through a machine learning algorithm, which associates the neural activity with the individual phonemes of English.

The output is auto-corrected using a language model and placed on the screen.

It’s also read out with a standard text-to-voice program.
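The auto-correction step can be pictured with a toy example. The sketch below is not the Stanford team's method: it assumes a hypothetical three-word lexicon with made-up phoneme spellings and simply picks the word whose phonemes are closest, by edit distance, to what the decoder produced. A real language model would also weigh which words are likely to follow one another.

```python
# Toy illustration only: the lexicon and its phoneme spellings are
# assumptions made up for this example, not data from the study.
LEXICON = {
    "water": ["W", "AO", "T", "ER"],
    "hello": ["HH", "AH", "L", "OW"],
    "help": ["HH", "EH", "L", "P"],
}


def edit_distance(a, b):
    """Levenshtein distance between two sequences of phonemes."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x
                            curr[j - 1] + 1,      # insert y
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def correct_word(decoded_phonemes):
    """Return the lexicon word closest to the decoded phoneme sequence."""
    return min(LEXICON,
               key=lambda word: edit_distance(decoded_phonemes, LEXICON[word]))


# The decoder mistakes the final "P" for a "B"; the closest word is still "help".
print(correct_word(["HH", "EH", "L", "B"]))
```

Here a single misread phoneme is absorbed because no other word in the lexicon is as close to the decoded sequence.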

Computer software that translates signals from the brain into language has existed for some time, but it relied on pre-arranged questions and answers, so conversations had to be orchestrated in advance.

The new system, however, is designed to replicate normal speech using AI.

It can decode up to 125,000 English words at a 24 per cent error rate. When the system was based on a 50-word vocabulary it was found to have a 9 per cent error rate.

“It's a useful vocabulary that someone with severe paralysis could use to communicate essential needs,” said Dr Jaimie Henderson, professor of Neurosurgery at Stanford University.

Henderson believes that using more electrodes with higher channel counts will scale up the vocabulary dramatically.
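The article does not say how the error rates quoted above are measured. Speech systems are commonly scored by word error rate: the number of substituted, missing and extra words, divided by the number of words the speaker intended. Assuming that is the metric here, a minimal sketch looks like this. The function name and the example sentences are illustrative, not taken from the study.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the length of the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Standard dynamic-programming edit distance, computed over words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # word missing from output
                            curr[j - 1] + 1,      # extra word in output
                            prev[j - 1] + cost))  # wrong word, or a match
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of four gives an error rate of 0.25.
print(word_error_rate("i need some water", "i need sun water"))
```

On that reading, a 24 per cent error rate means roughly one word in four comes out wrong, while 9 per cent is closer to one in eleven.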

Similar work is being done by researchers from the University of California San Francisco.

They placed an electrode grid under the skull of a patient who has been unable to talk since she suffered a brain stem stroke 18 years ago.

“A reason that we like these is that they don't penetrate into the brain, and they cover a larger region of the brain,” said Sean Metzger, one of the researchers at the University of California San Francisco (UCSF).

“So it's possible to record from more areas and the recordings are more stable, meaning they look the same day to day, in the sense they sit on top of the brain and they're also recording from tens of thousands of neurons at once, whereas electrodes will record from 1 to 3 neurons at once. So it's a different signal but still has a lot of information.”

The research team is also using avatars to show facial expressions.

While both studies have been published in the journal Nature and described as a “milestone”, the technology is a long way from being used by patients at home.

The women in both studies need to be wired up to a computer system and they need to be observed by researchers.

Both the Stanford and the UCSF studies are aiming to recruit more participants in order to improve the software.

The researchers hope to eventually develop a discreet device that can sit completely inside the skull and connect wirelessly with the software and computers.
